Machine Learning

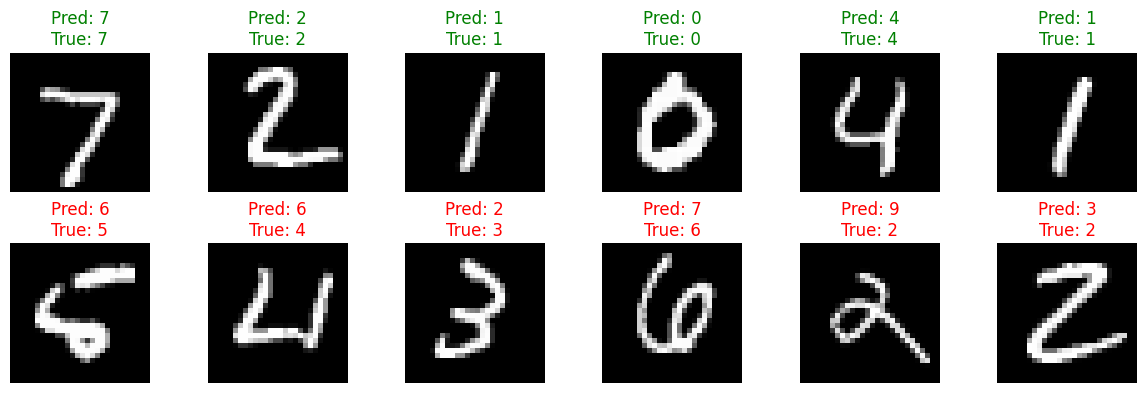

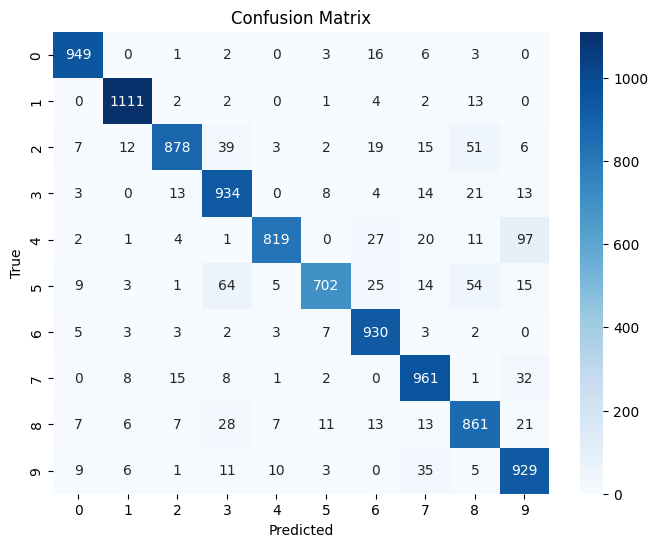

Built a complete machine learning pipeline in Python to train a linear classifier on the MNIST dataset, applying fundamental ML concepts including cross-entropy loss, backpropagation, and the bias-variance tradeoff. The model achieved 90% accuracy. Used PyTorch to define and train the model, manage DataLoaders, and implement L2 regularization via weight decay. Ran a greedy 1D hyperparameter search over learning rate, weight decay, and batch size, tuning one hyperparameter at a time to optimize model performance. Applied ML best practices including GPU acceleration (CUDA/Apple Silicon MPS), fixed-seed reproducibility, and train/validation/test splitting. Visualized results with Matplotlib and Seaborn, including loss/accuracy curves and a confusion matrix. Documented the full mathematical foundation, from logit computation to the SGD weight update rule, in a Jupyter Notebook.
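The greedy 1D hyperparameter search described above can be sketched roughly as follows. This is an illustrative outline, not the notebook's actual code: `greedy_1d_search`, `toy_validation_accuracy`, the candidate grids, and the starting configuration are all hypothetical names and values chosen for the example. The toy scoring function stands in for "train the model with this configuration and return validation accuracy".

```python
import math
from typing import Callable, Dict, List

Config = Dict[str, float]


def greedy_1d_search(
    evaluate: Callable[[Config], float],
    grid: Dict[str, List[float]],
    start: Config,
) -> Config:
    """Tune one hyperparameter at a time, holding the others at their current best."""
    best = dict(start)
    best_score = evaluate(best)
    # Hyperparameters are visited in the grid's insertion order.
    for name, candidates in grid.items():
        for value in candidates:
            trial = {**best, name: value}
            score = evaluate(trial)
            # Keep a candidate only if it strictly improves the score.
            if score > best_score:
                best, best_score = trial, score
    return best


def toy_validation_accuracy(cfg: Config) -> float:
    """Hypothetical stand-in for a real train-and-validate run.

    The score peaks at learning_rate=0.1, weight_decay=1e-4, batch_size=64.
    These peak values are assumptions made up for this example.
    """
    return (
        0.90
        - 0.05 * abs(math.log10(cfg["learning_rate"]) + 1)
        - 0.01 * abs(math.log10(cfg["weight_decay"]) + 4)
        - 0.01 * abs(math.log2(cfg["batch_size"]) - 6)
    )


# Hypothetical candidate values and starting point.
grid = {
    "learning_rate": [0.001, 0.01, 0.1, 1.0],
    "weight_decay": [1e-5, 1e-4, 1e-3],
    "batch_size": [32, 64, 128],
}
start = {"learning_rate": 0.01, "weight_decay": 1e-3, "batch_size": 32}

best = greedy_1d_search(toy_validation_accuracy, grid, start)
print(best)
```

With this grid the greedy search needs 11 evaluations (one for the start plus 4 + 3 + 3 candidates), against 36 for a full grid. The trade-off is that it can miss interactions between hyperparameters, since each one is tuned with the others held fixed.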